/by apr on this-is-what-I'm-doing-now/

During my long (I wish I could say short) life as a programmer, I've written the same kind of applications over and over and over again.

Every time an application goes live, I immediately start rewriting it on my own budget, in what I think could be a more efficient, modular and elegant way.

That's how I wrote jPOS: previous versions were written in C, then C++, then back in C, and then Java.

Over the last 10 years or so, most of my projects have been either transaction switches or credit/debit/stored-value/gift-card/loyalty systems.

We have some pretty impressive production-grade systems running, from small acquirers and card issuers in small countries to large financial institutions, as well as Fortune 500 and Fortune 100 companies processing massive transaction loads.

Almost all of these projects benefit from the jPOS-EE modular architecture, but in most situations we end up implementing whatever protocols the customer already has in place.

There are usually inbound protocol specs in place, sometimes outbound specs, plus customer-specific routing, reporting/extract requirements and business logic, and that's what we have to implement.

To reuse as much code as possible, we have the jPOS TransactionManager, a great general-purpose component that fosters the reuse of a lot of code (the so-called transaction 'participants'). But we still end up implementing large chunks of customer-specific code, and we still need to read tons of specs. We read specs and we implement those specs, but there's no such thing as a jPOS spec.
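To illustrate the participant idea, here is a minimal, self-contained sketch of a two-phase prepare/commit/abort pipeline. It deliberately does not use the real jPOS API (so it compiles on its own): `Participant`, `MiniTransactionManager`, `CheckAmount`, `Journal` and the `AMOUNT` context key are hypothetical names, and the transaction context is just a `Map`. The point is that a generic participant such as `CheckAmount` can be reused across projects while customer-specific participants are added to the same pipeline.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical participant contract, modeled on a two-phase commit. */
interface Participant {
    boolean prepare(Map<String, Object> ctx); // true = ready to commit
    void commit(Map<String, Object> ctx);
    void abort(Map<String, Object> ctx);
}

/** Hypothetical, single-threaded stand-in for a transaction manager. */
class MiniTransactionManager {
    private final List<Participant> participants = new ArrayList<>();

    MiniTransactionManager add(Participant p) {
        participants.add(p);
        return this;
    }

    /** Prepares every participant in order; commits all if all agree, otherwise aborts the ones already prepared. */
    boolean execute(Map<String, Object> ctx) {
        List<Participant> prepared = new ArrayList<>();
        for (Participant p : participants) {
            if (!p.prepare(ctx)) {
                // Roll back only the participants that already prepared, newest first.
                for (int i = prepared.size() - 1; i >= 0; i--) {
                    prepared.get(i).abort(ctx);
                }
                return false;
            }
            prepared.add(p);
        }
        for (Participant p : prepared) {
            p.commit(ctx);
        }
        return true;
    }
}

/** Reusable (hypothetical) validation participant: requires a positive Long under "AMOUNT". */
class CheckAmount implements Participant {
    public boolean prepare(Map<String, Object> ctx) {
        Object amount = ctx.get("AMOUNT");
        return amount instanceof Long && (Long) amount > 0;
    }
    public void commit(Map<String, Object> ctx) { }
    public void abort(Map<String, Object> ctx) { }
}

/** Hypothetical participant that just records which phases it went through. */
class Journal implements Participant {
    final List<String> log = new ArrayList<>();
    public boolean prepare(Map<String, Object> ctx) { log.add("prepare"); return true; }
    public void commit(Map<String, Object> ctx) { log.add("commit"); }
    public void abort(Map<String, Object> ctx) { log.add("abort"); }
}
```

In this sketch, reuse comes from composition: each project assembles its own list of participants, and only the customer-specific ones have to be written from scratch. The real TransactionManager does considerably more than this, none of which is modeled here.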

To change that, and in an attempt to move ourselves to the center of the boxing ring (so to speak), we now have our own specs. Because we didn't reinvent the wheel, the effort has been very small: the new ISO-8583 version 2003 spec is really nice and well thought out.

Most things that were previously done using esoteric private fields are now very well specified as part of the standard, so there's not much to think about (no thinking is good); one just has to follow the experts. But we don't live in a perfect world, so we also need to support legacy protocol versions (both inbound and outbound).

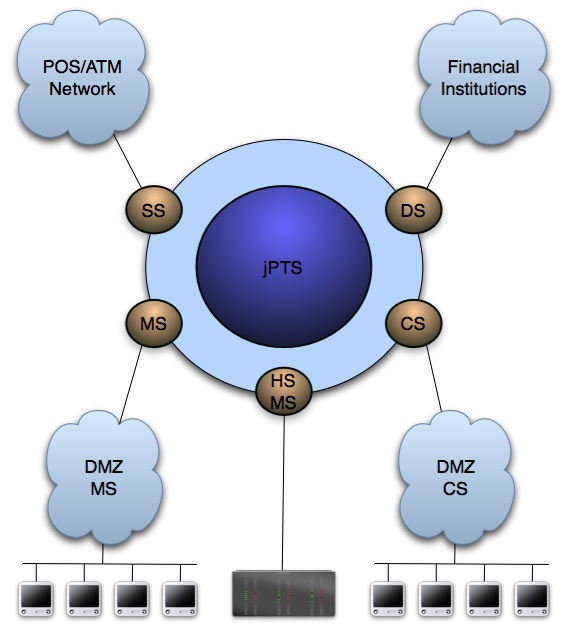

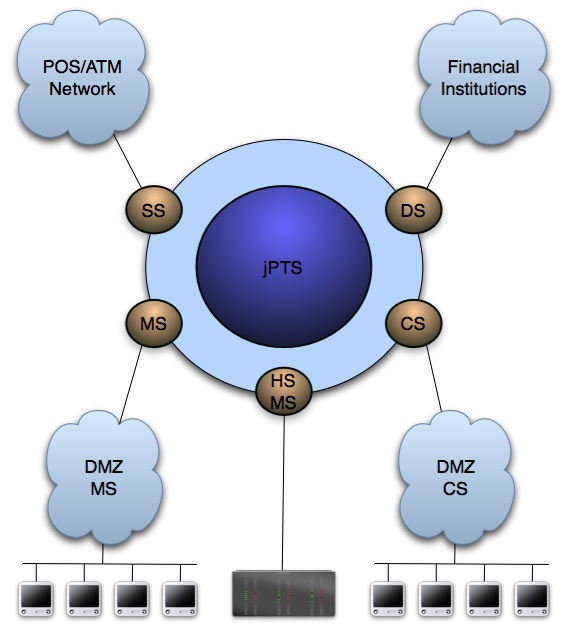

Our approach is to use what we call "Stations", which speak both the jPOS Transaction Switch (jPTS) internal message format (jPTS IMF), based on ISO-8583 version 2003, and whatever external protocol is involved.
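As an illustration of the Station idea, the sketch below shows a hypothetical source station for a legacy ISO-8583:1987 endpoint. It relies on one fact of the standard: the first digit of the message type indicator (MTI) encodes the version (0 = 1987, 1 = 1993, 2 = 2003), so a 1987 authorization request `0100` corresponds to `2100` in the 2003 version. The `Station` interface, the class name and the field-number-to-string message representation are assumptions made for this sketch; a real station would also remap the fields whose layout changed between versions.

```java
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical contract: one side speaks the internal format (IMF), the other an external protocol. */
interface Station {
    Map<Integer, String> toImf(Map<Integer, String> external);
    Map<Integer, String> fromImf(Map<Integer, String> imf);
}

/**
 * Hypothetical station for an ISO-8583:1987 endpoint. Only the MTI version digit is
 * translated; field remapping between versions is intentionally left out.
 */
class Iso87SourceStation implements Station {
    public Map<Integer, String> toImf(Map<Integer, String> external) {
        return withVersion(external, '2'); // '2' = ISO 8583:2003
    }

    public Map<Integer, String> fromImf(Map<Integer, String> imf) {
        return withVersion(imf, '0'); // '0' = ISO 8583:1987
    }

    // Messages are modeled as field number -> value, with field 0 holding the MTI.
    private static Map<Integer, String> withVersion(Map<Integer, String> msg, char version) {
        String mti = msg.get(0);
        if (mti == null || mti.length() != 4) {
            throw new IllegalArgumentException("missing or malformed MTI: " + mti);
        }
        Map<Integer, String> copy = new TreeMap<>(msg); // leave the caller's message untouched
        copy.put(0, String.valueOf(version) + mti.substring(1));
        return copy;
    }
}
```

For example, `new Iso87SourceStation().toImf(Map.of(0, "0100"))` yields a copy whose MTI is `2100`, and `fromImf` maps a `2110` response back to `0110`.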

We currently have five station types:

- Source Stations (SS) are typically POS/ATM drivers or multi-lane POS servers receiving transactions using ISO-8583 variants or proprietary protocols and converting them to jPTS' Internal Message Format.

- Destination Stations (DS) are used to convert jPTS IMF based transactions into messages suitable for a given destination endpoint. Those can be either a different variant of the ISO-8583 protocol or any other proprietary protocol.

- Monitoring Stations (MS) can be configured to receive copies of messages sent from and to SSs and DSs either as part of the transaction (synchronous mode) or via store and forward (asynchronous mode). MSs can be used to monitor system health, feed in-house reporting/settlement subsystems as well as active and passive fraud prevention subsystems.

- Control Stations (CS) can use jPTS IMF to initiate several system jobs such as setting the current capture date, forcing a cutover, modifying system configuration parameters, reporting a hot card or hot bin range, etc.

- HSM Stations (HSMS) provide an abstraction layer that enables communication with different brands and models of hardware-based (and software-based) security modules.
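The two Monitoring Station delivery modes can be sketched as follows. `MonitoringStation` and `MonitorTap` are hypothetical classes: in synchronous mode the copy is delivered while the message is being handled, while in asynchronous mode it is stored in a queue and forwarded later. A production store-and-forward implementation would persist the queue and retry failed deliveries, which this in-memory sketch does not do.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

/** Hypothetical monitoring station: it just keeps the copies it receives. */
class MonitoringStation {
    final List<Map<Integer, String>> received = new ArrayList<>();

    void deliver(Map<Integer, String> copy) {
        received.add(copy);
    }
}

/** Hypothetical tap placed on the SS/DS message path to feed one monitoring station. */
class MonitorTap {
    private final MonitoringStation station;
    private final boolean synchronous;
    private final BlockingQueue<Map<Integer, String>> pending = new LinkedBlockingQueue<>();

    MonitorTap(MonitoringStation station, boolean synchronous) {
        this.station = station;
        this.synchronous = synchronous;
    }

    void onMessage(Map<Integer, String> msg) {
        Map<Integer, String> copy = Map.copyOf(msg); // immutable snapshot of the message
        if (synchronous) {
            station.deliver(copy); // delivered as part of processing the transaction
        } else {
            pending.add(copy);     // stored now, forwarded later
        }
    }

    /** Forwards every stored copy in arrival order and returns how many were forwarded. */
    int forward() {
        List<Map<Integer, String>> batch = new ArrayList<>();
        pending.drainTo(batch);
        batch.forEach(station::deliver);
        return batch.size();
    }
}
```

The trade-off is the usual one: synchronous delivery lets a monitoring station (for example, an active fraud check) take part in the transaction, at the cost of adding its latency to every message, while store and forward keeps it off the critical path.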

So we basically have two tightly coupled projects: jPTS (the switch) and jCard (the card management system, or CMS, which implements jPTS IMF natively and can act as a jPTS Destination Station).

Both take advantage of miniGL, and we are currently working on the user interface, where we have some good news too: we now have the jPOS Presentation Manager (I'll talk about it soon; think dynamic scaffolding to provide CRUDL support for the most commonly managed entities).
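The Presentation Manager itself is not described here, so the following is only a generic sketch of what "dynamic scaffolding" usually means: deriving a list view from an entity's fields at runtime (here via Java reflection) instead of hand-writing a screen per entity. `Card` and `Scaffold.listView` are hypothetical, and this is not necessarily how the Presentation Manager is implemented.

```java
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical entity; a real one would be a persistent (e.g. ORM-mapped) class. */
class Card {
    String pan = "411111******1111"; // masked PAN
    String status = "ACTIVE";
}

class Scaffold {
    /**
     * Builds a header row of field names followed by one row of values per entity.
     * Note: getDeclaredFields() does not guarantee any particular field order.
     */
    static List<List<String>> listView(Class<?> type, List<?> entities) {
        List<Field> fields = new ArrayList<>();
        for (Field f : type.getDeclaredFields()) {
            // Skip static and compiler-generated fields; they are not entity columns.
            if (!Modifier.isStatic(f.getModifiers()) && !f.isSynthetic()) {
                f.setAccessible(true);
                fields.add(f);
            }
        }
        List<List<String>> table = new ArrayList<>();
        List<String> header = new ArrayList<>();
        for (Field f : fields) {
            header.add(f.getName());
        }
        table.add(header);
        for (Object entity : entities) {
            List<String> row = new ArrayList<>();
            for (Field f : fields) {
                try {
                    row.add(String.valueOf(f.get(entity)));
                } catch (IllegalAccessException e) {
                    throw new IllegalStateException(e); // not expected after setAccessible(true)
                }
            }
            table.add(row);
        }
        return table;
    }
}
```

Adding a field to `Card` would add a column to the generated view without touching `Scaffold`, which is the appeal of scaffolding for the many simple entities a CMS has to manage.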

While we still have a lot of work to do and a lot of features to add before we can release these projects, we believe these applications could be extremely helpful, even as a reference implementation, to teams working on their own jPOS and jPOS-EE projects. In the same way that a picture is worth a thousand words, studying a working application (especially a good one) is worth a thousand programmers' guides. We are currently working on a sneak preview program that will allow you to embrace this technology now and use it in your projects, either as a reference or as their core engine. Interested parties can register here.
